Minimal Models of the Physical and of the Mental

Processes, Representations & Signature Limits

s.butterfill@warwick.ac.uk

There are two (or more) systems for tracking others’ beliefs.

‘there is a paucity of … data to suggest that they ['two systems' approaches] are the only or the best way of carving up the processing’

Adolphs (2010, p. 759)

‘we wonder whether the dichotomous characteristics used to define the two-system models are … perfectly correlated …

[and] whether a hybrid system that combines characteristics from both systems could not be … viable’

Keren and Schul (2009, p. 537)

‘the process architecture of social cognition is still very much in need of a detailed theory’

Adolphs (2010, p. 759)

automatic process

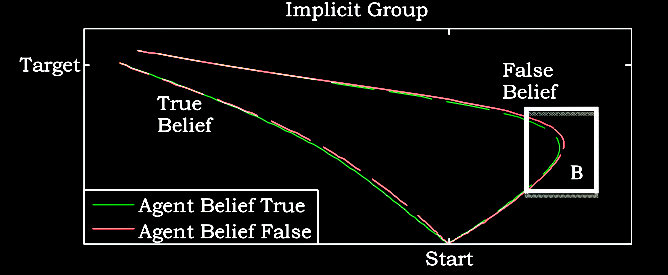

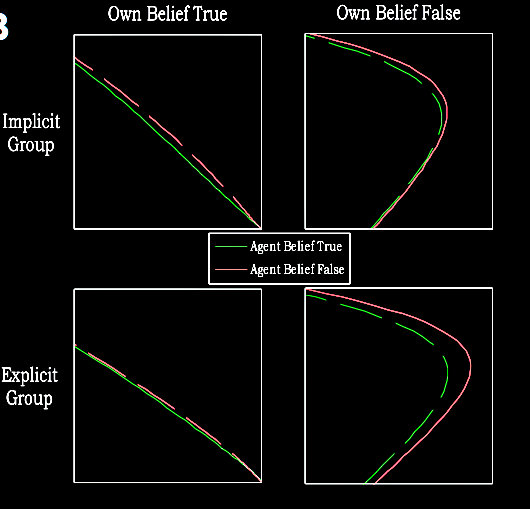

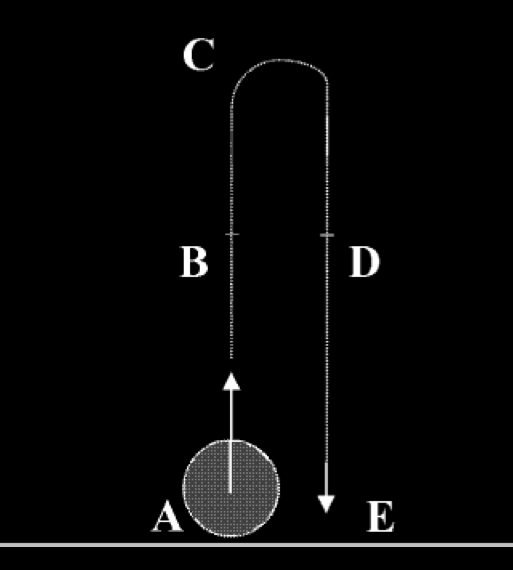

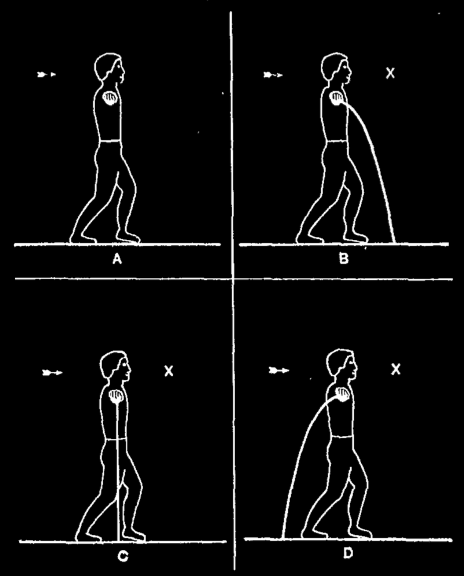

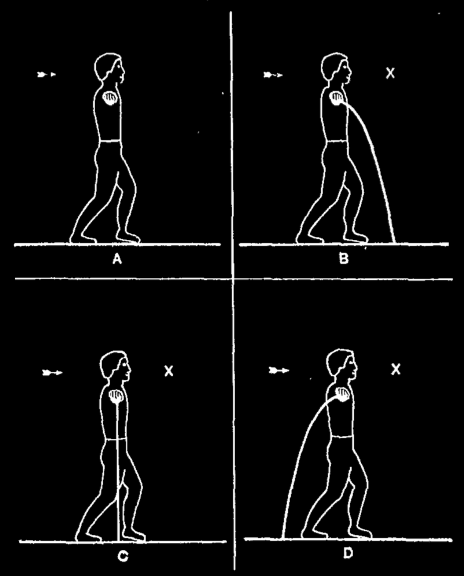

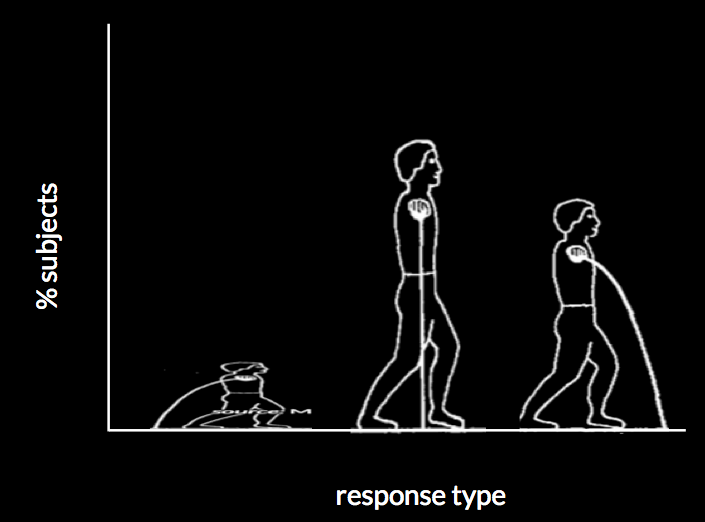

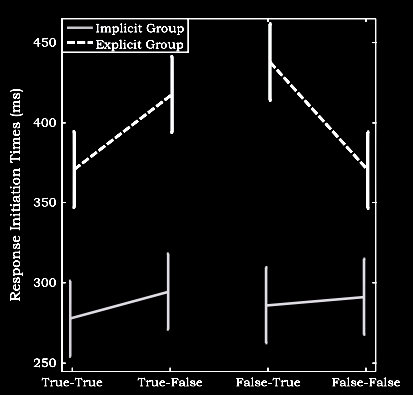

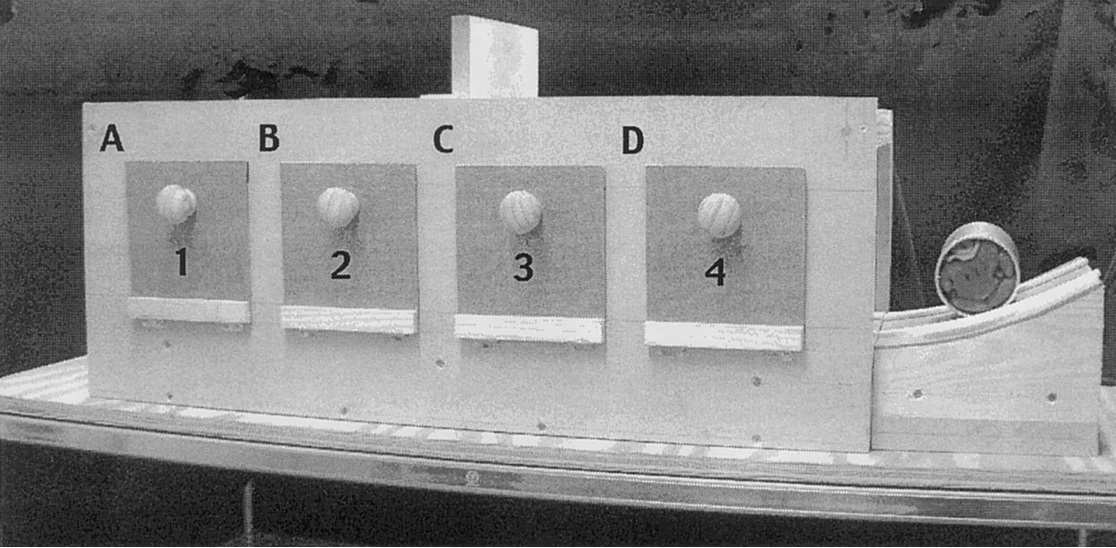

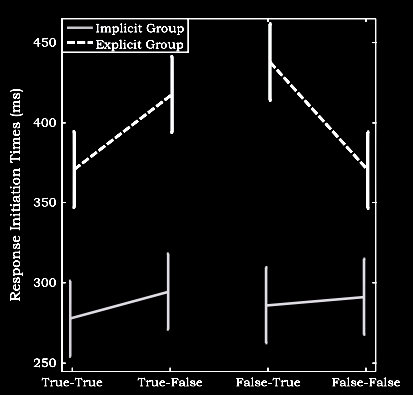

van der Wel et al (2014, figure 1)

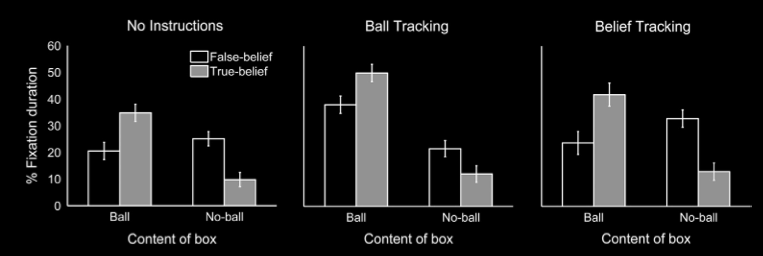

van der Wel et al (2014, figure 2)

Some processes involved in tracking others’ beliefs are automatic.

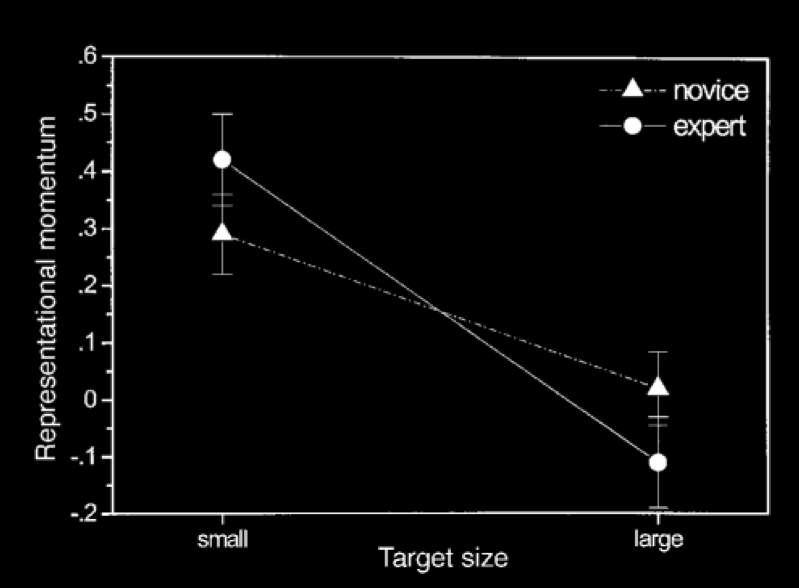

van der Wel et al (2014, figure 3)

‘they slowed down their responses when there was a belief conflict versus when there was not’

Some processes involved in tracking others’ beliefs are automatic, and some are not.

background assumptions

Verbal responses are typically a consequence of non-automatic processes,

whereas, on some tasks, differences in looking times are a consequence of automatic processes.

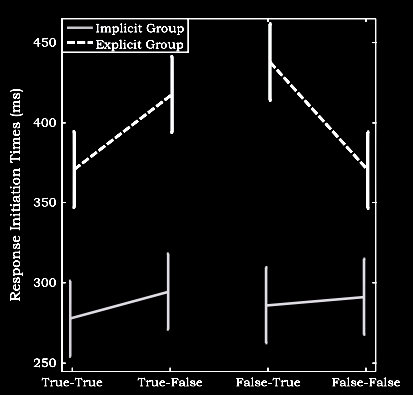

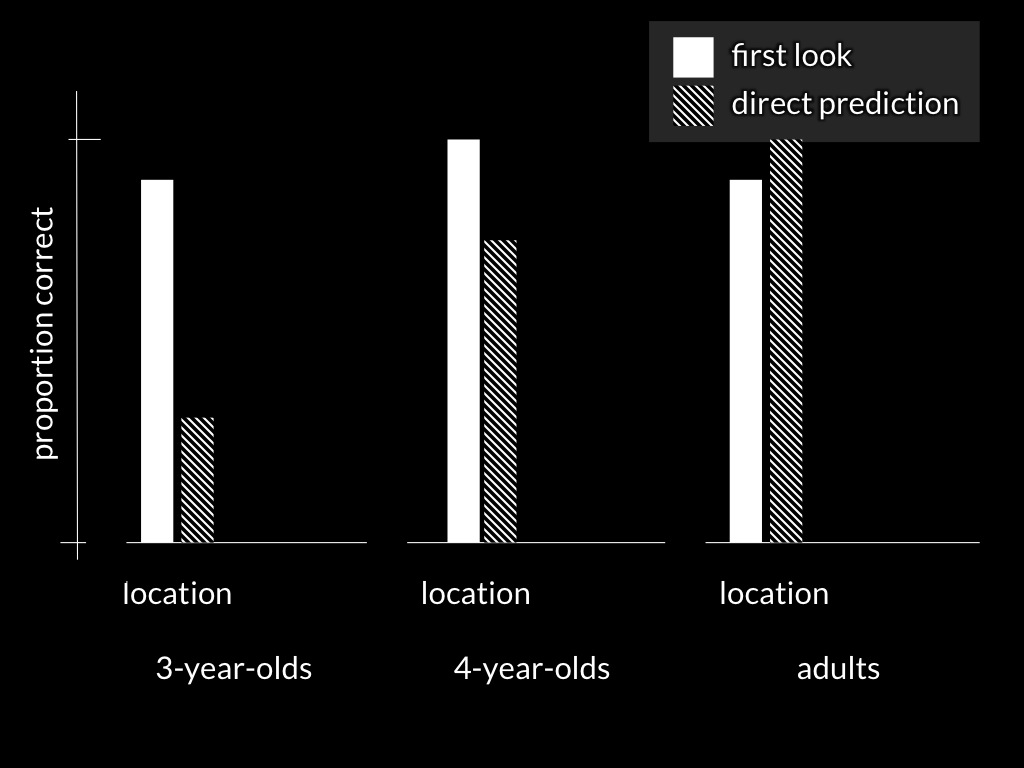

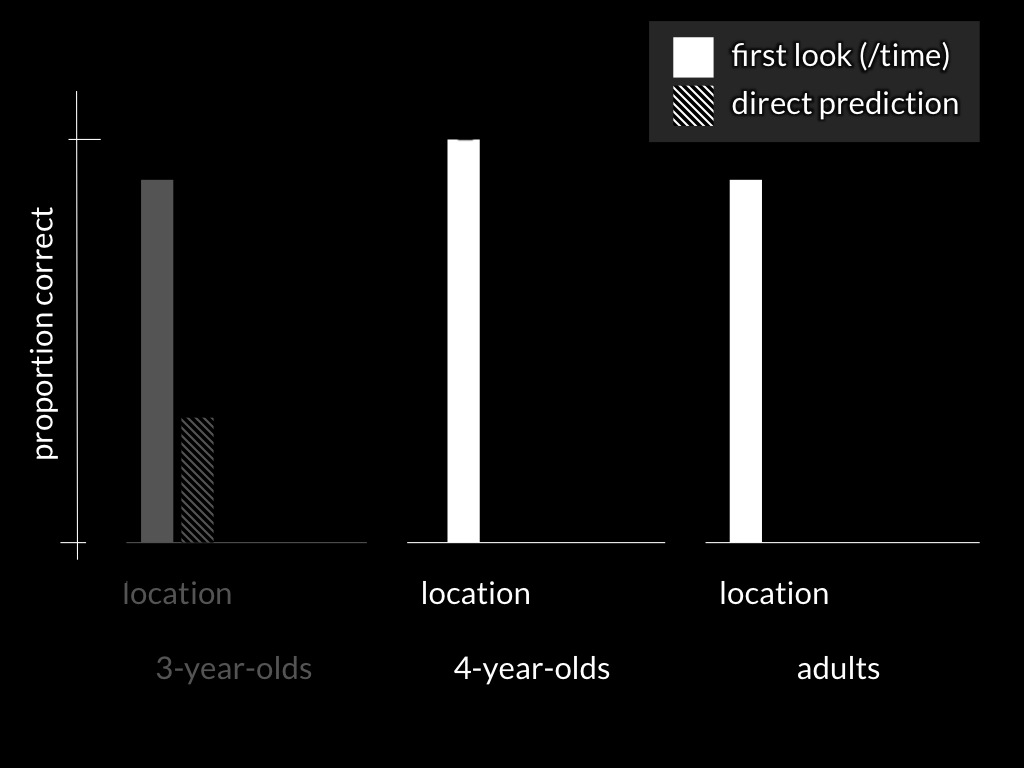

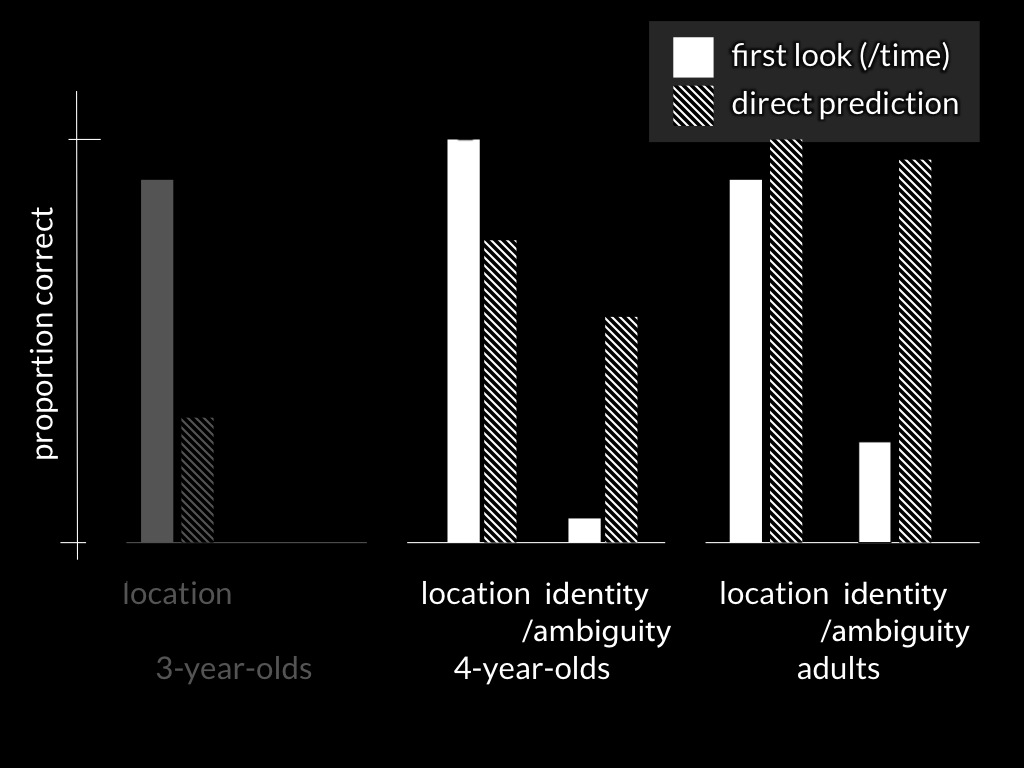

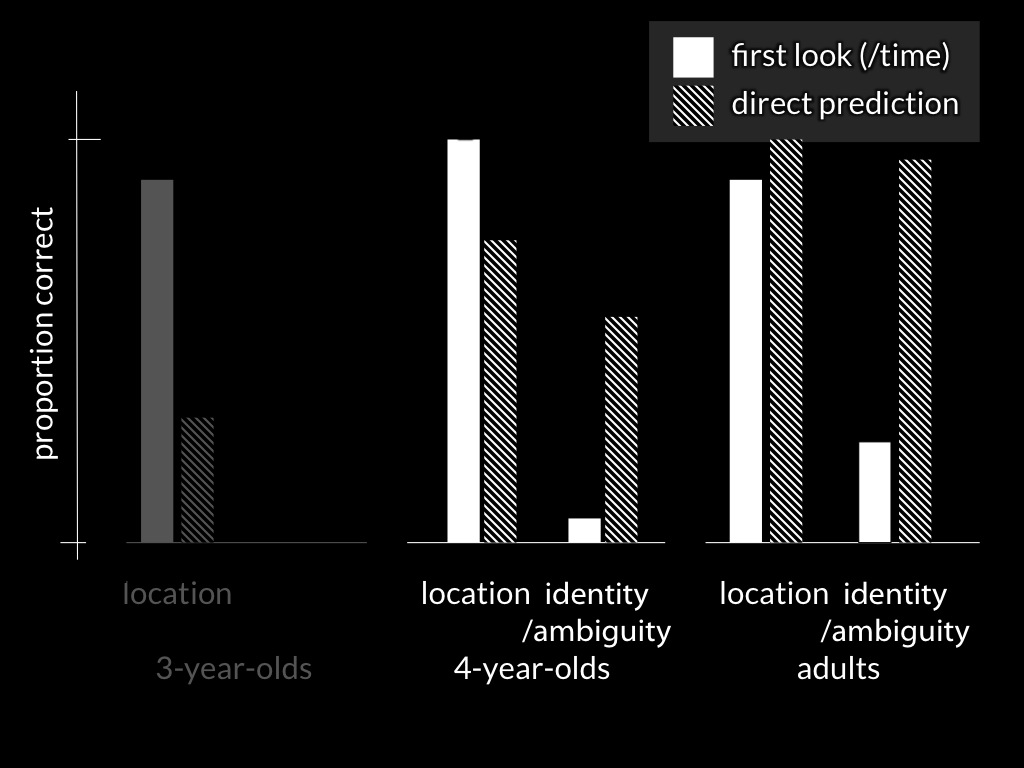

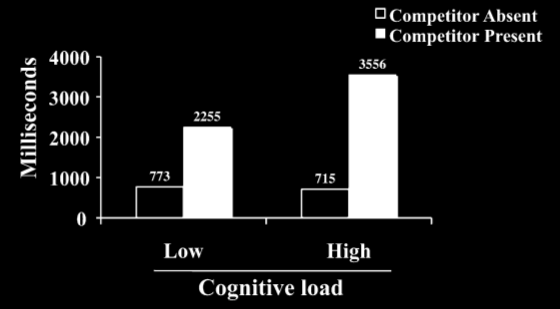

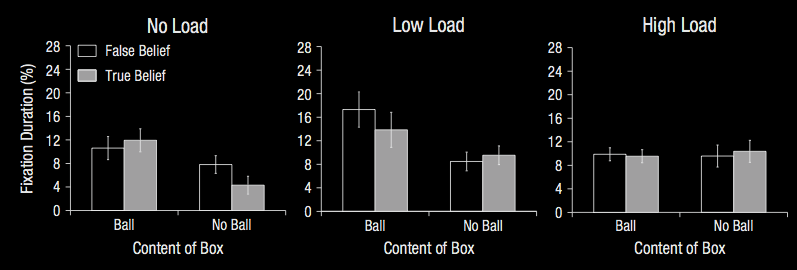

Schneider et al (2014, figure 3)

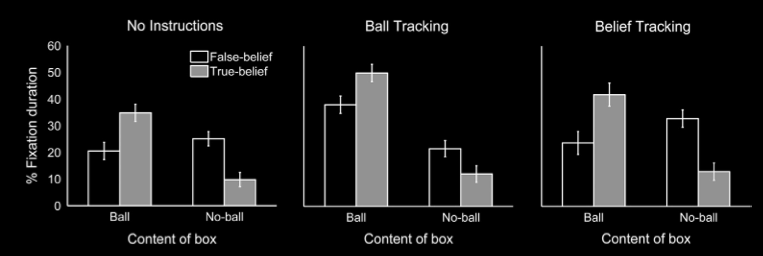

Low & Watts (2013); Low et al (2014)

Some processes involved in tracking others’ beliefs are automatic, and some are not.

In a single subject on a single trial, different responses can carry conflicting information about another’s belief.

Models

How do mindreaders model minds?

- compare -

How do physical thinkers model the physical?

1. theories of the physical

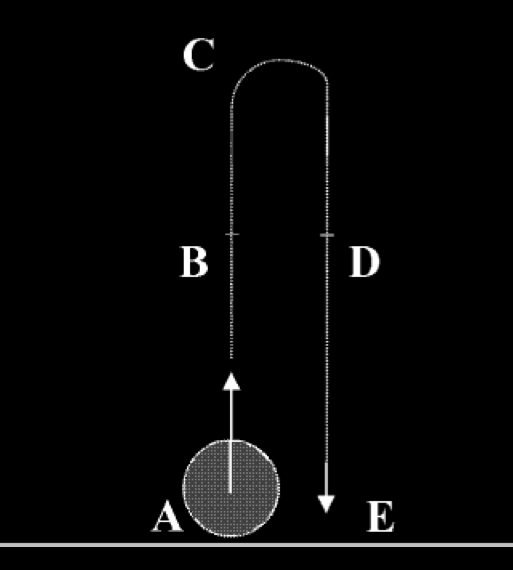

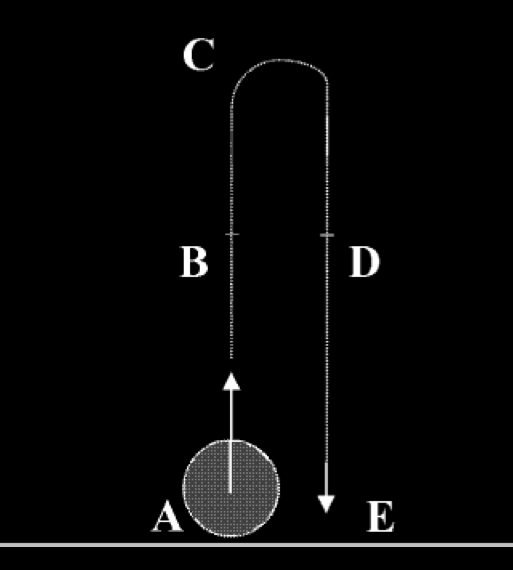

Kozhevnikov & Hegarty (2001, figure 1)

McCloskey et al (1983, figure 1)

2. models of the physical

What is a model of the physical?

entities

e.g.

momentum = mass * velocity

measurement scheme(s)

e.g.

mass measured in grams using real numbers

model != theory

3. signature limits

What model of the physical are you using?

McCloskey et al (1983, figure 1)

drawn from McCloskey et al (1983)

Different people use different models.

signature limits

Hypothesis 1

Response R is the product of a process using a model characterised by Theory 1

Fact

Theory 1 predicts that objects will F.

Prediction of Hypothesis 1

Response R will proceed as if objects will F

Hypothesis 2

Response R is the product of a process using a model characterised by Theory 2

Fact

Theory 2 predicts that objects will not F.

Prediction of Hypothesis 2

Response R will proceed as if objects will not F
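The inference pattern above can be sketched in code. This is a minimal illustration under stated assumptions, not the procedure of any cited study: the two toy models (`newtonian_offset`, `straight_down_offset`), the `consistent_models` helper, the tolerance and all numbers are hypothetical and introduced here only for illustration. Each toy model predicts where an object dropped from a moving carrier lands. A response that proceeds as if one prediction holds is consistent with the hypothesis that the process behind it uses that model.

```python
import math
from typing import Callable, Dict, List

G = 9.81  # gravitational acceleration in m/s^2


def newtonian_offset(speed: float, height: float) -> float:
    """Toy 'Theory 1': the dropped object keeps the carrier's horizontal speed."""
    fall_time = math.sqrt(2 * height / G)
    return speed * fall_time


def straight_down_offset(speed: float, height: float) -> float:
    """Toy 'Theory 2': the dropped object falls straight down from the release point."""
    return 0.0


# Hypothetical registry of candidate models (names are illustrative only).
MODELS: Dict[str, Callable[[float, float], float]] = {
    "newtonian": newtonian_offset,
    "straight_down": straight_down_offset,
}


def consistent_models(response_offset: float, speed: float, height: float,
                      tolerance: float = 0.25) -> List[str]:
    """Return the models whose prediction lies within `tolerance` metres of the response."""
    return [
        name
        for name, predict in MODELS.items()
        if abs(predict(speed, height) - response_offset) <= tolerance
    ]


# A response placing the landing point about 1 m ahead of the release point
# is consistent with the Newtonian toy model only.
print(consistent_models(1.0, speed=2.0, height=1.25))

# A response placing the landing point directly below the release point
# is consistent with the straight-down toy model only.
print(consistent_models(0.0, speed=2.0, height=1.25))
```

The design point is that the two models diverge on this scenario, so a single response can discriminate between the hypotheses. On scenarios where the models agree, the same response would be consistent with both and would not be diagnostic.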

What model of the physical are your object-tracking systems using?

Kozhevnikov & Hegarty (2001, figure 1)

Kozhevnikov & Hegarty (2001, figure 2)

Within a person, different processes use different models.

4. efficiency-flexibility trade offs

‘an impetus heuristic could yield an approximately correct (and adequate) solution ... but would require less effort or fewer resources than would prediction based on a correct understanding of physical principles.’

Hubbard (2014, p. 640)

How do mindreaders model minds?

- compare -

How do physical thinkers model the physical?

1. theories

2. models

3. signature limits

4. efficiency-flexibility trade offs

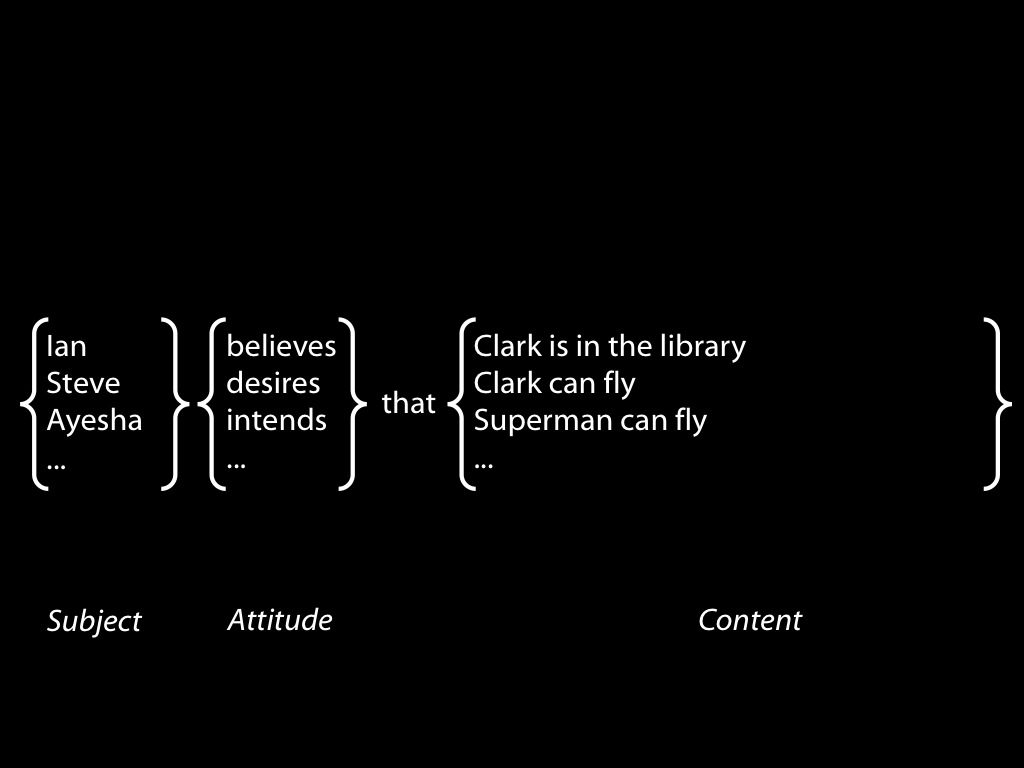

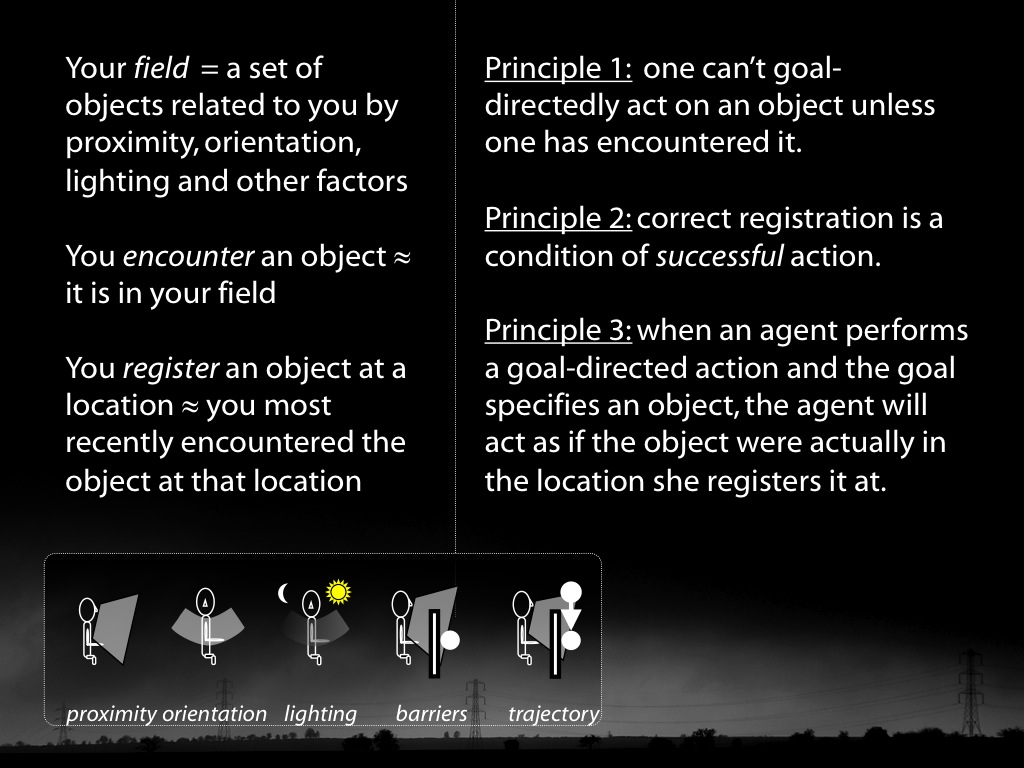

What is a model of the mental?

What is a model of the physical?

entities

e.g.

momentum = mass * velocity

measurement scheme(s)

e.g.

mass measured in grams using real numbers

What is a model of the mental?

attitudes

e.g.

action = belief + desire

content individuation

e.g.

system of propositions, map-like structures, ...

What makes a model of the mental

- minimal?

- canonical?

How do mindreaders model minds?

There are multiple models of the mental,

mindreading is any process which uses one of these models to track mental states,

and different models provide different efficiency-flexibility trade offs.

Models and Systems

≥ two belief-tracking, mindreading systems

≥ two models of the mental

Constructing minimal theories of mind yields models of the mental which

- could be used by automatic mindreading processes.

- are used by some automatic mindreading processes.

- are the only models used by automatic mindreading processes.

Signature Limits

How can we test

which model of the mental (or of the physical)

a process is using?

Kozhevnikov & Hegarty (2001, figure 1)

signature limits

of minimal models of mind

- identity as compression/expansion [has been tested*]

- duck/rabbit [has been tested*]

- fission/fusion [is being tested*]

- quantification

measures:

- spontaneous anticipatory looking

- looking duration

- active helping (unpublished data)

van der Wel et al (2014, figure 1)

Constructing minimal theories of mind yields models of the mental which

- could be used by automatic mindreading processes.

- are used by some automatic mindreading processes.

- are the only models used by automatic mindreading processes.

Development

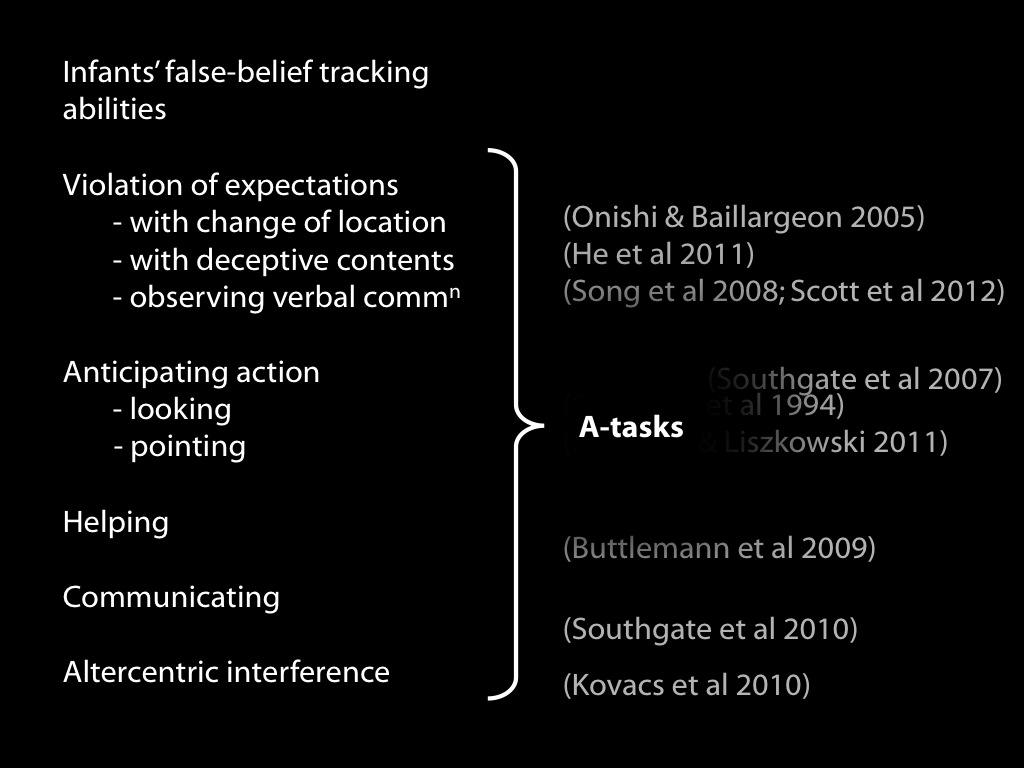

There is evidence that mindreading systems using minimal models of the mental are present in infancy.

Canonical Hypothesis

Infants’ anticipatory looking reflects mindreading processes that use a canonical model of the mental.

Prediction

No performance contrast between false beliefs about (a) location and (b) identity, quantification or appearance.

Minimal Hypothesis

Infants’ anticipatory looking reflects mindreading processes that use a minimal model of the mental.

Prediction

The performance contrast exists.

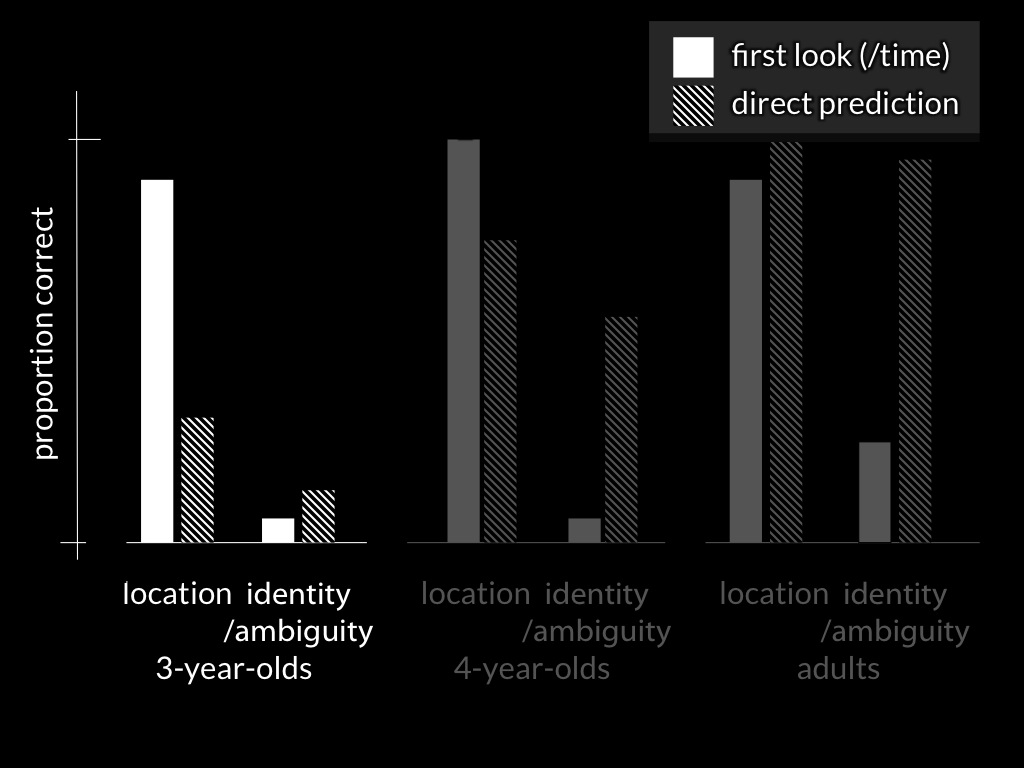

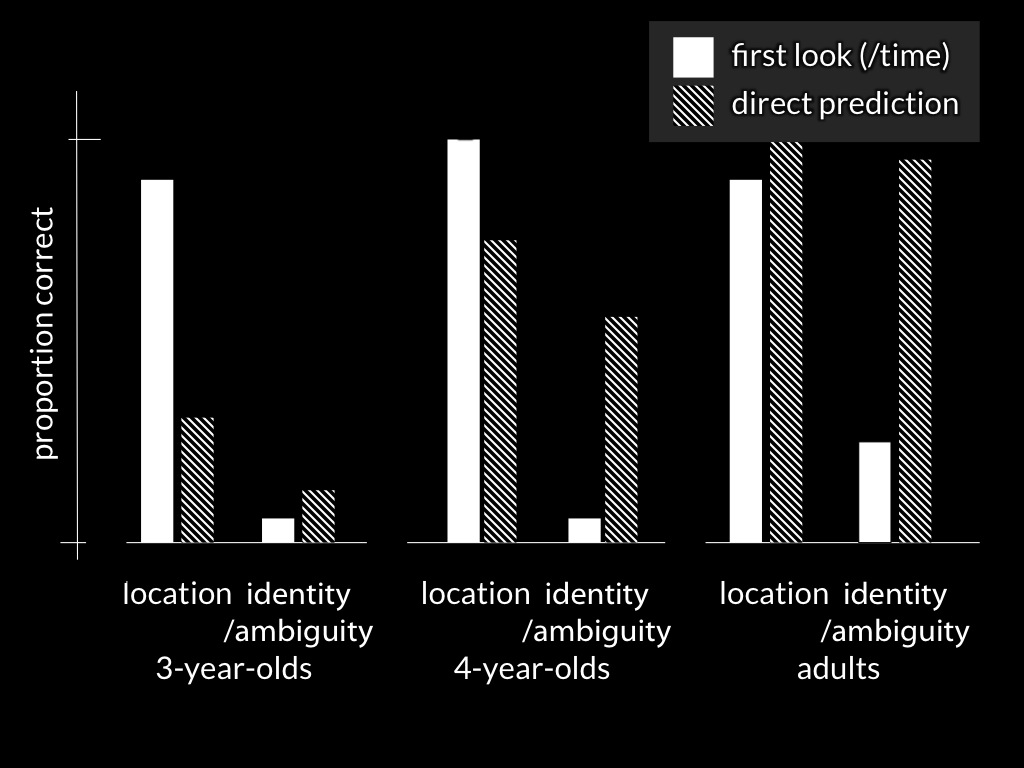

drawn from Low & Watts (2013); Low et al (2014)

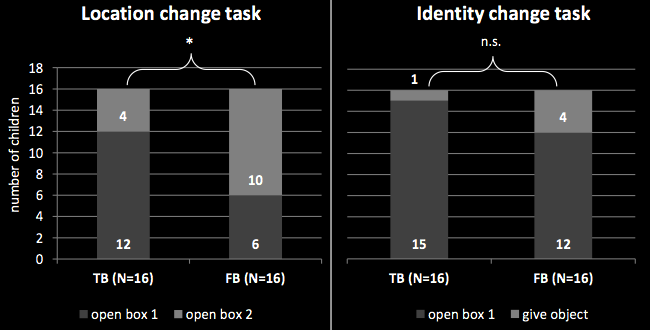

What about other measures, e.g. helping or pointing?

Fizke et al (poster/in preparation, figure 3)

Mindreading systems using minimal models of the mental are present in infancy.

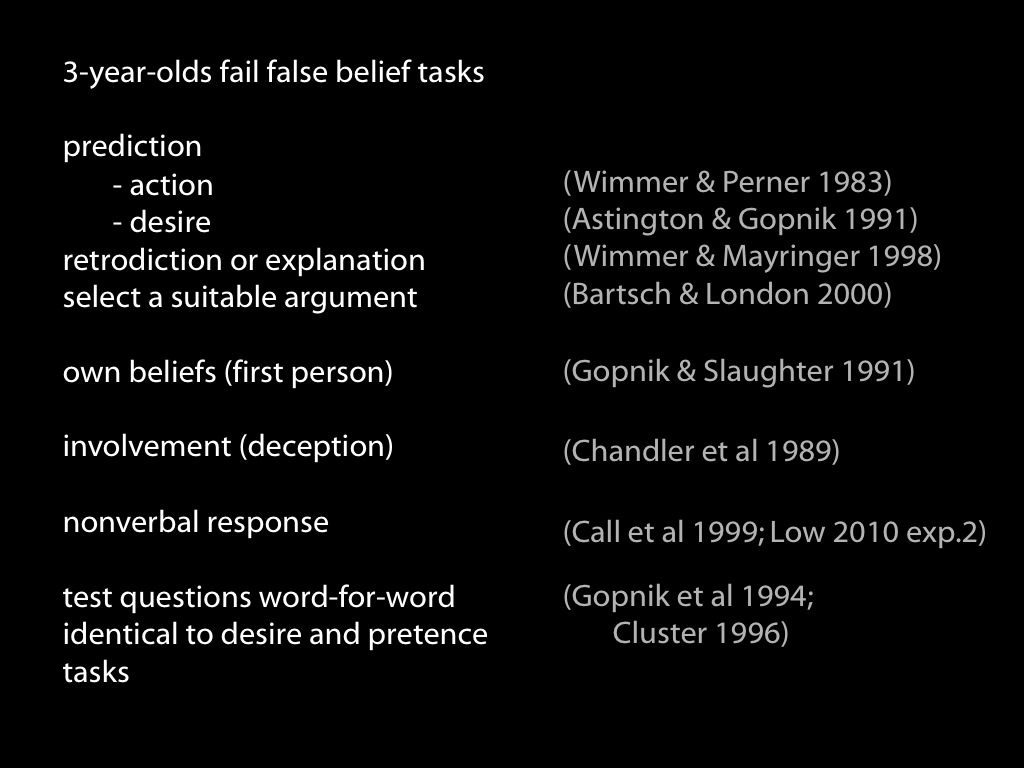

Infants’ only mindreading systems are those which use minimal models of the mind.

Infants have limited working memory, inhibitory control, ...

but using a canonical model is cognitively demanding.

- working memory

- attention

- inhibitory control

- it makes people slow down ...

van der Wel et al (2014, figure 3)

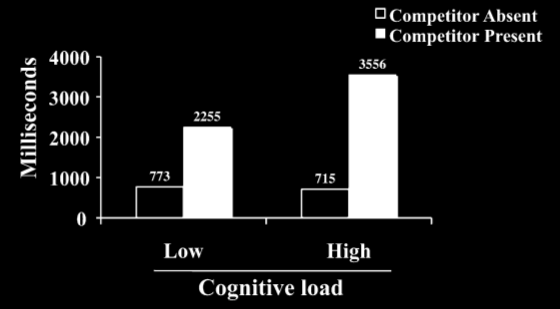

Lin et al (2010, figure 3)

Can puzzling patterns of findings about infant mindreading be fully explained by identifying how infants model the mental?

No.

Or by distinguishing automatic from non-automatic mindreading?

No!

Hood et al (2003, figure 1)

systems,

models,

what more?

Conclusion

1. systems

There are ≥ two mindreading systems.

2. models

There are ≥ two models of the mental, canonical and minimal.

Mindreading is the use of any model of the mental.

3. models + systems

Automatic mindreading processes use minimal models only.

Minimal vs canonical models allow different flexibility-efficiency trade offs.

4. emergence

0/1-year-olds sometimes use minimal models.

0/1-year-olds always use minimal models.

Automatic mindreading processes in human adults also occur in 0/1-year-olds, scrub jays, chimps, ...

Appendix: Automatic Implies Minimal

Constructing minimal theories of mind yields models of the mental which

- could be used by automatic mindreading processes.

- are used by some automatic mindreading processes.

- are the only models used by automatic mindreading processes.

Some mindreading systems use a canonical model,

and no automatic mindreading processes use a canonical model of the mental.

Automaticity requires (some degree of) cognitive efficiency,

but using a canonical model is cognitively demanding.

- working memory

- attention

- inhibitory control

- it makes people slow down ...

Schneider et al’s puzzle about looking times.

Schneider et al (2012, figure 2); Schneider et al (2014, figure 2)

van der Wel et al (2014, figure 3)

Lin et al (2010, figure 3)

Some mindreading systems use a canonical model,

and no automatic mindreading processes use a canonical model of the mental.

Therefore

Different mindreading systems use different models of the mental.
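The logical shape of this argument can be checked formally. The Lean 4 sketch below is one possible formalisation, offered under assumptions rather than as the argument's official form: the names `Process`, `Model`, `uses`, `automatic` and `canonical` are introduced here for illustration, and processes stand in for systems. It also makes explicit a third premise, that at least one automatic mindreading process exists, which draws on the earlier claim that some processes involved in tracking others’ beliefs are automatic.

```lean
-- Hypothetical formalisation; every name below is an illustrative assumption.
theorem different_models {Process Model : Type}
    (uses : Process → Model → Prop)
    (automatic : Process → Prop)
    (canonical : Model)
    -- Premise 1: some mindreading process uses a canonical model.
    (h1 : ∃ p, uses p canonical)
    -- Premise 2: no automatic mindreading process uses a canonical model.
    (h2 : ∀ q, automatic q → ¬ uses q canonical)
    -- Premise 3 (made explicit here): some mindreading process is automatic.
    (h3 : ∃ q, automatic q) :
    -- Conclusion: one process uses the canonical model and another does not.
    ∃ p q, uses p canonical ∧ ¬ uses q canonical :=
  h1.elim fun p hp =>
    h3.elim fun q hq =>
      ⟨p, q, hp, h2 q hq⟩
```

Without the third premise the conclusion would not follow, since the first two premises are compatible with there being no automatic processes at all.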