Perceiving Mental States?

How do you know about it? ・ it = this pen ・ it = this joy

perceive indicator, infer its presence

- vs -

perceive it

[ but perceiving is inferring ✓ ]

Aviezer et al (2012, figure 2A3)

verbal reports and ratings? No!

(Scholl & Tremoulet 2000; Schlottmann 2006)

contrast:

perceive indicator, infer its presence

- vs -

perceive it

‘We sometimes see aspects of each others’ mental lives, and thereby come to have non-inferential knowledge of them.’

McNeill (2012, p. 573)

challenge

Evidence?

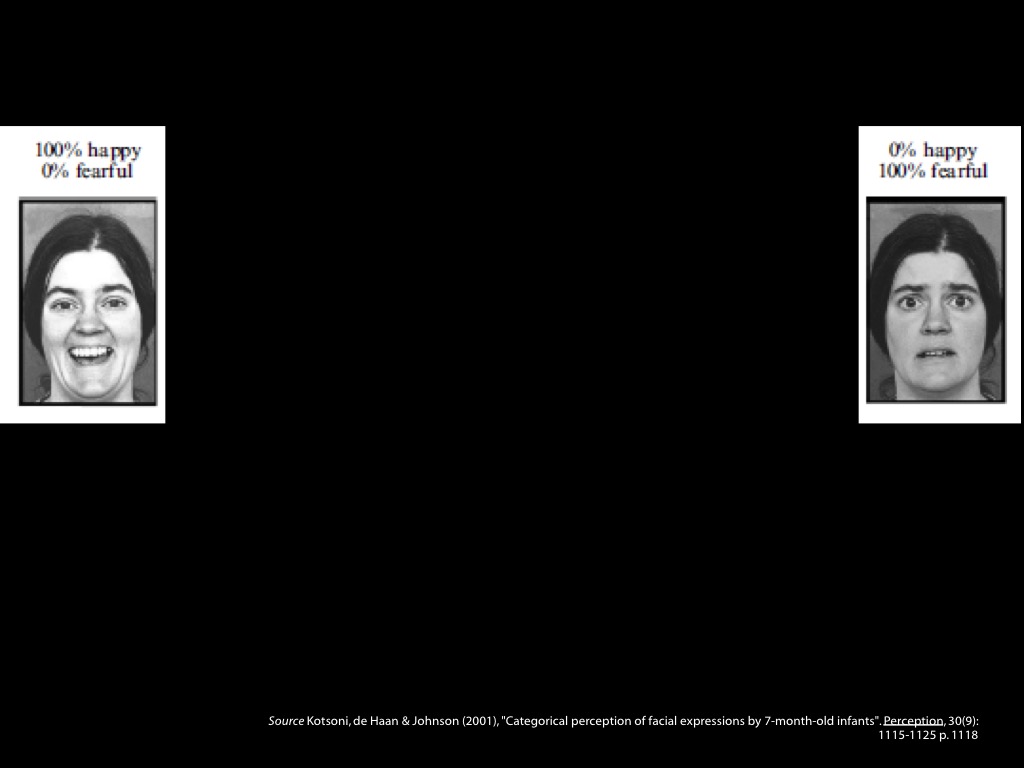

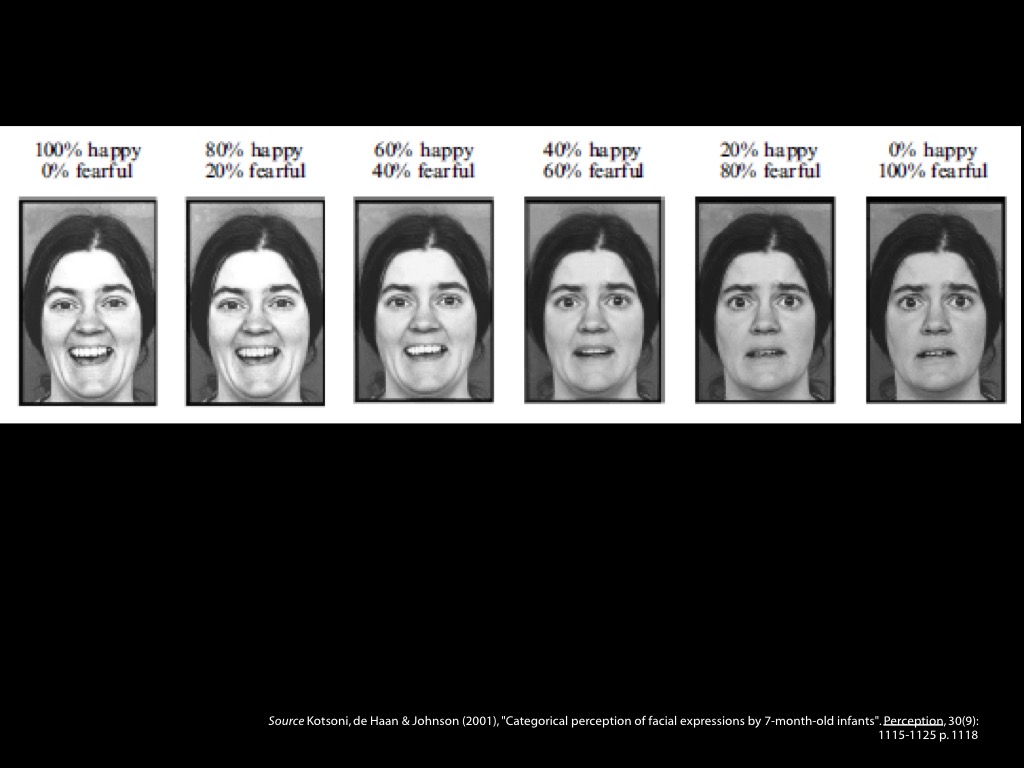

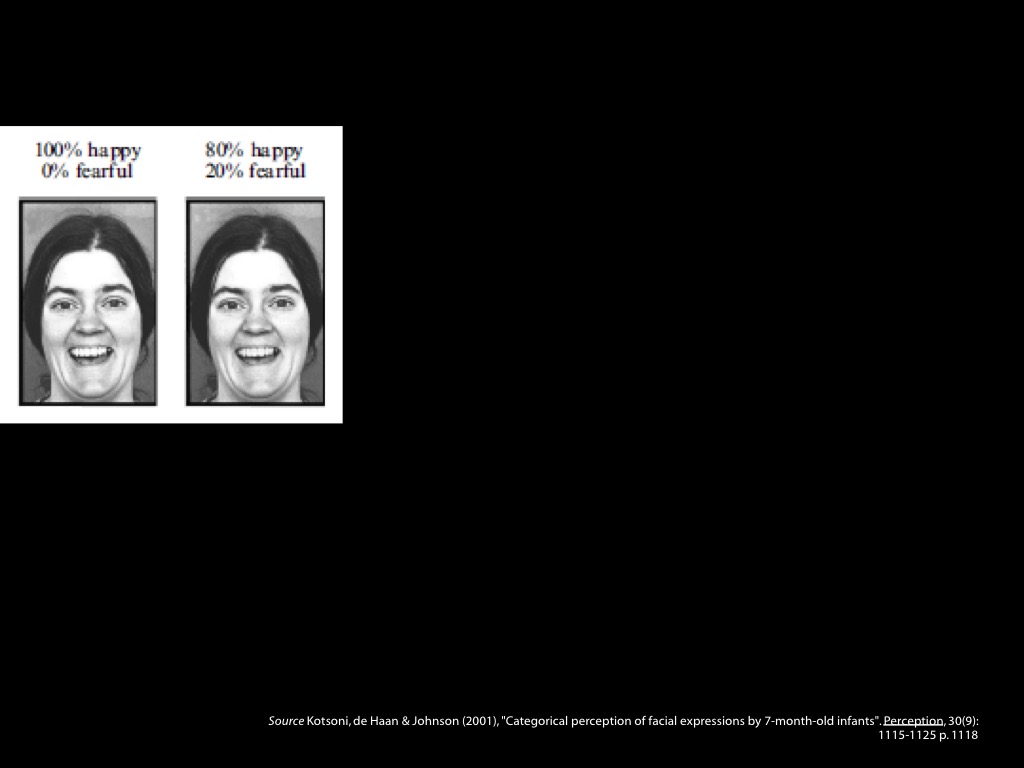

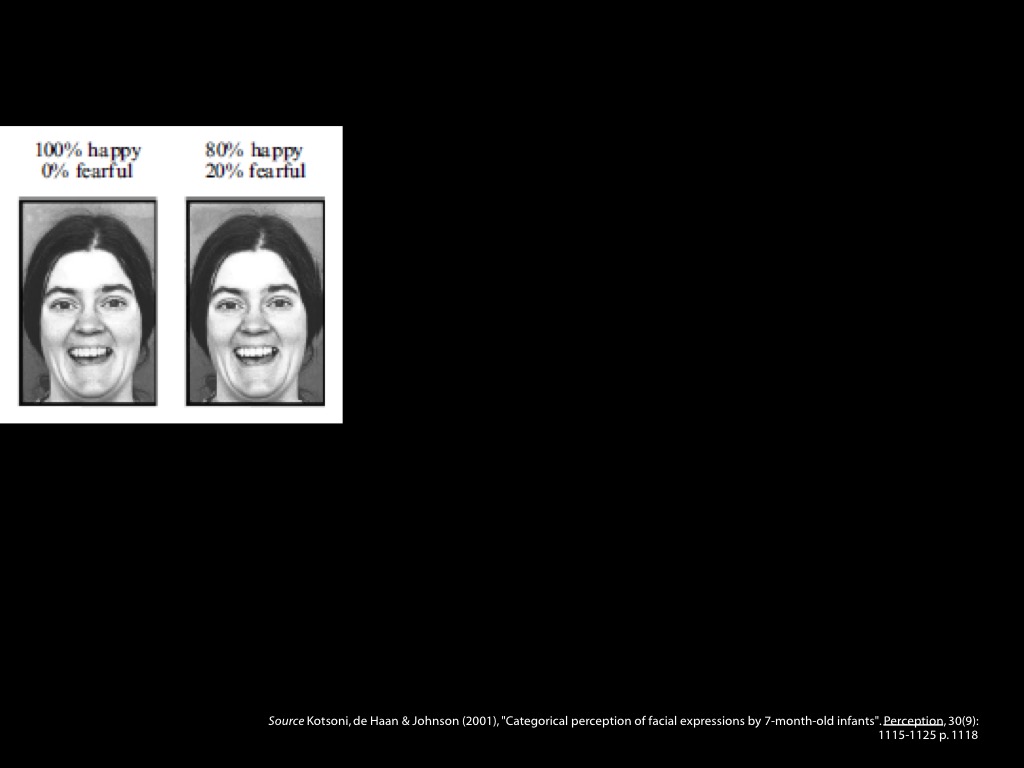

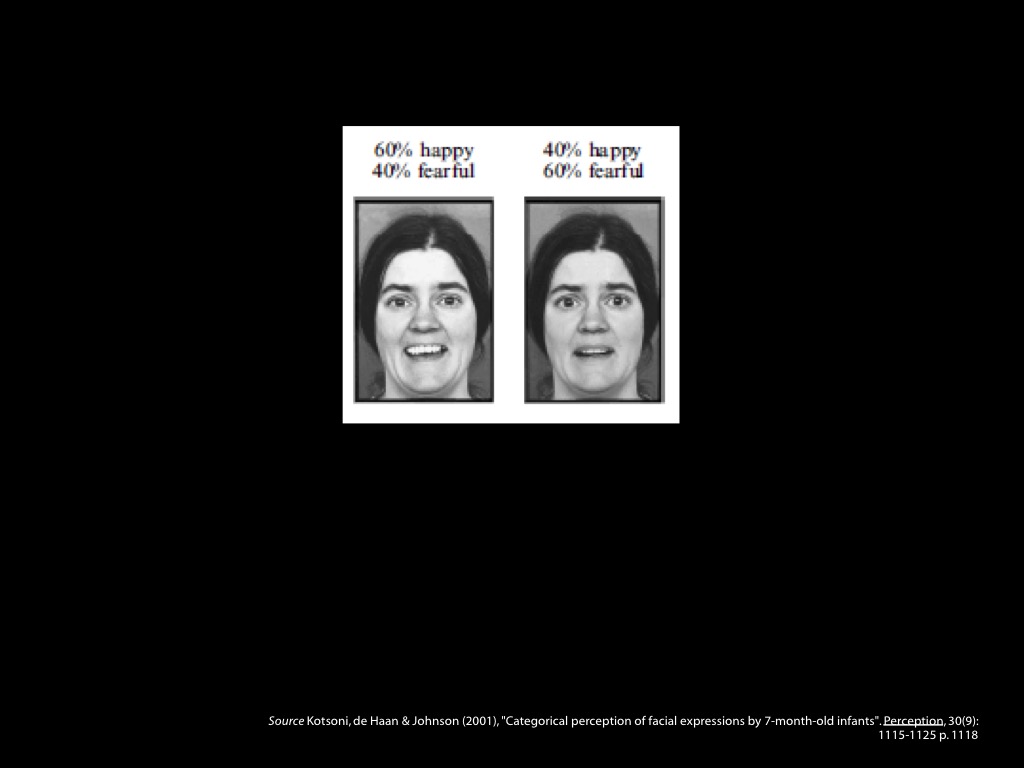

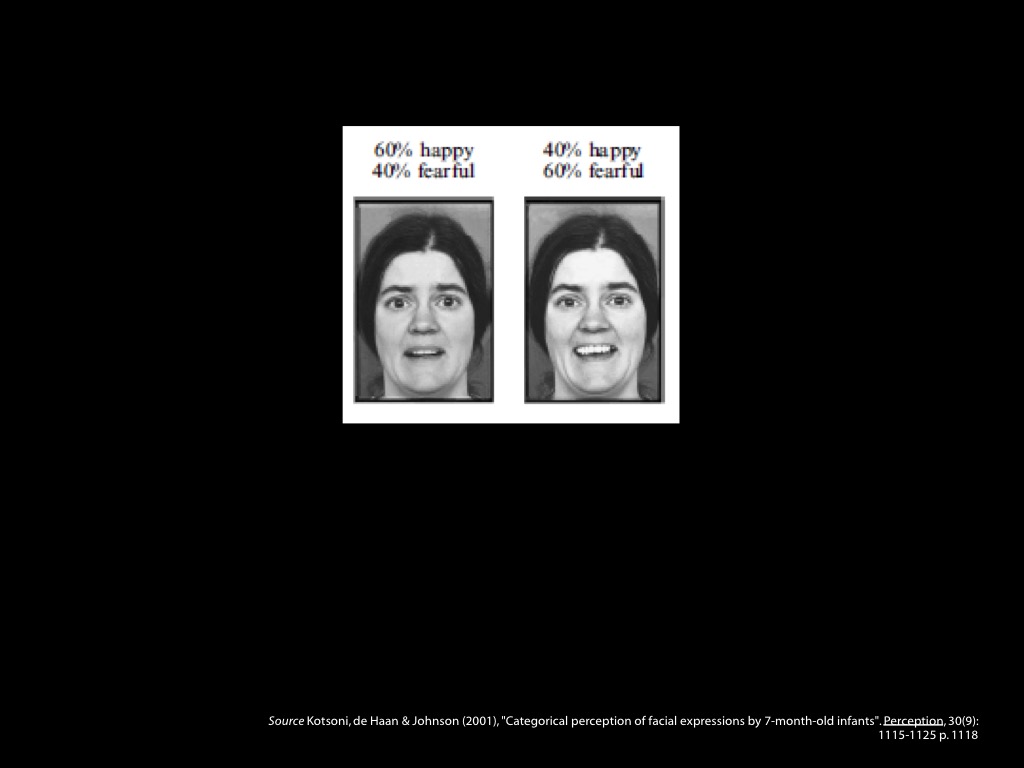

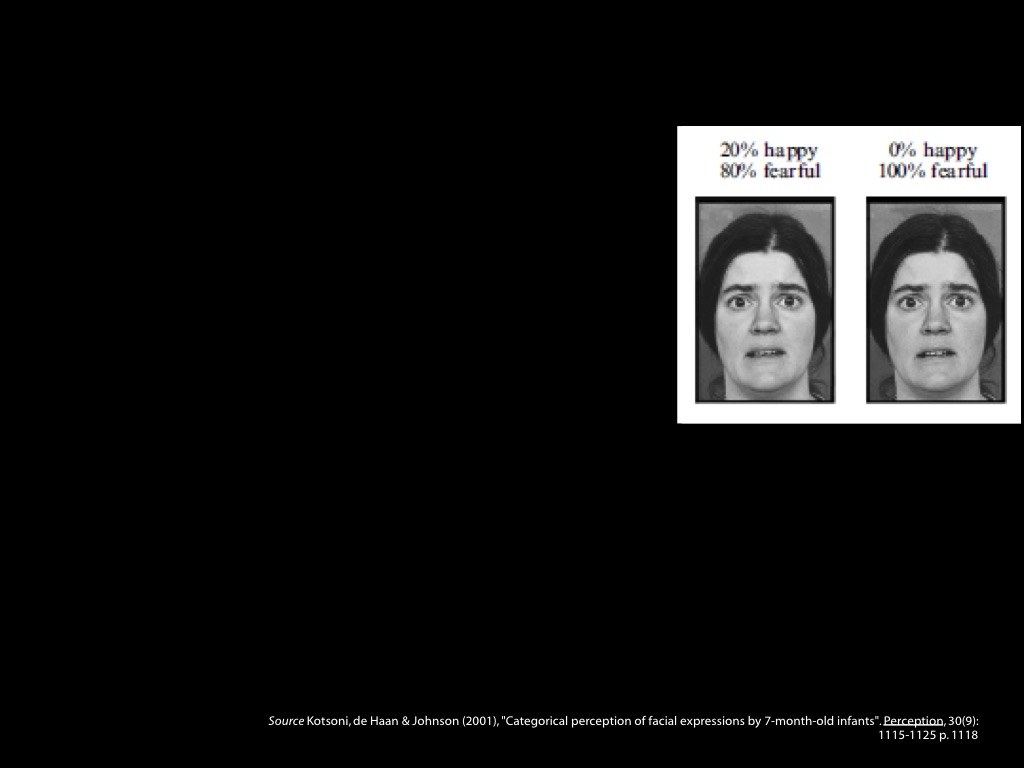

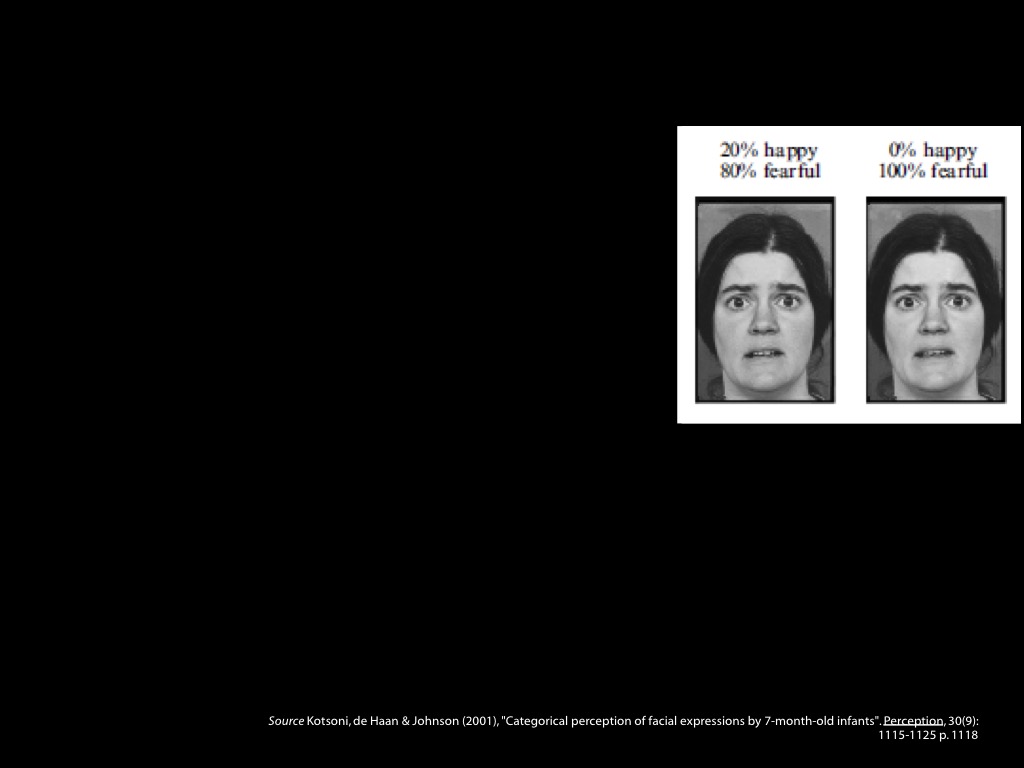

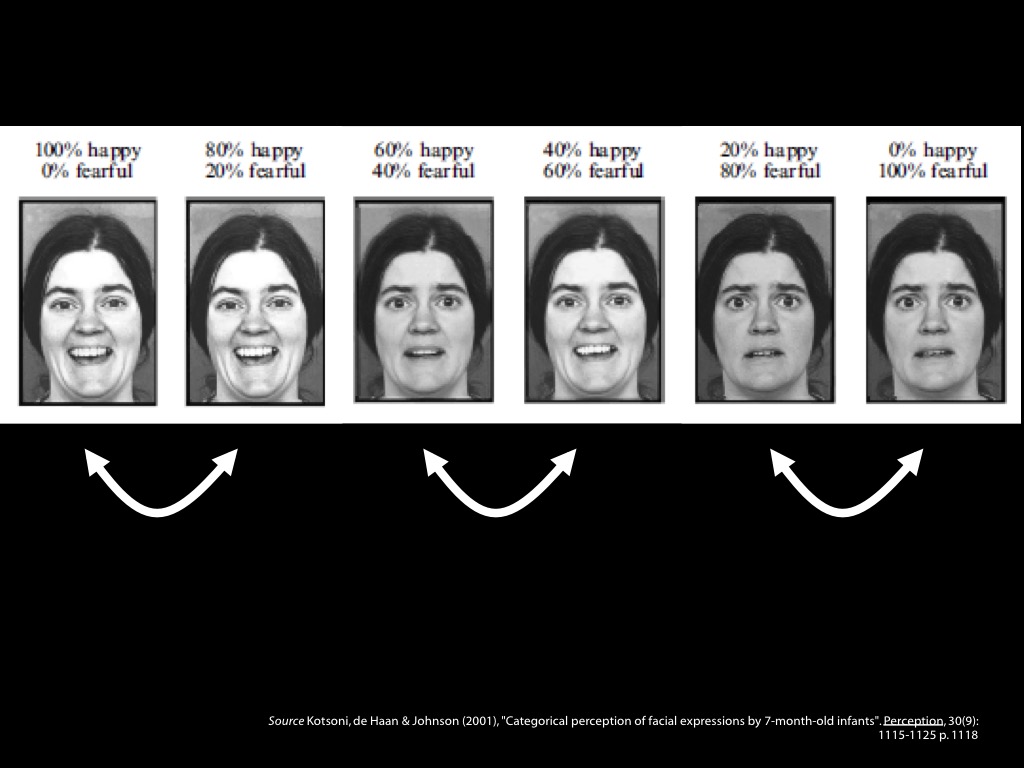

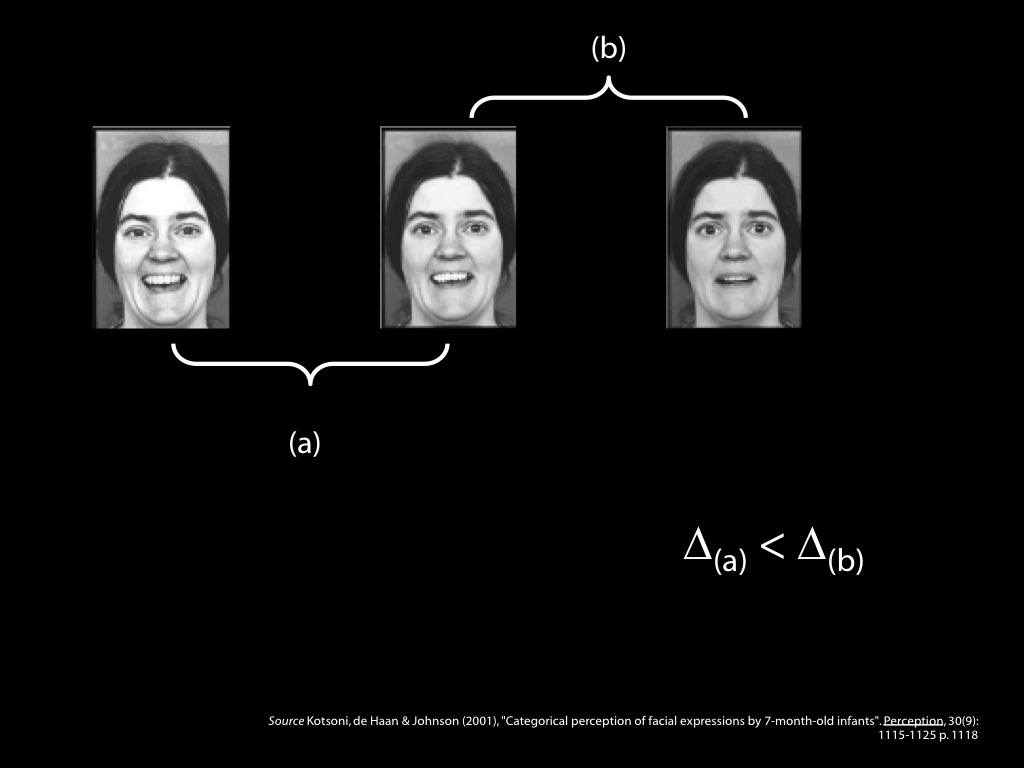

Categorical Perception & Emotion

[figure labels: 2.5B, 7.5BG, 2.5BG]

fix initial system of categories

measure discriminatory responses

observe between- vs within-category differences

exclude non-cognitive explanations for the differences
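The first three steps above can be sketched as a comparison between mean discrimination accuracy for stimulus pairs that straddle a category boundary and for pairs that fall inside one category. This is only a minimal illustration, not the procedure of any study cited here: the function name, stimulus names, category labels and accuracy figures are all made up, and the fourth step (excluding non-cognitive explanations) is not something the code can settle.

```python
from statistics import mean


def boundary_effect(categories, pair_accuracy):
    """Compare between-category and within-category discrimination.

    categories: maps each stimulus to its category label (step 1).
    pair_accuracy: maps a (stimulus, stimulus) pair to the proportion of
        trials on which the two were told apart (step 2).
    Returns (mean between-category accuracy, mean within-category accuracy).
    """
    between, within = [], []
    for (a, b), accuracy in pair_accuracy.items():
        if categories[a] != categories[b]:
            between.append(accuracy)
        else:
            within.append(accuracy)
    return mean(between), mean(within)


# Hypothetical data: four equally spaced stimuli, two categories.
categories = {"s1": "blue", "s2": "blue", "s3": "green", "s4": "green"}
pair_accuracy = {
    ("s1", "s2"): 0.60,  # within "blue"
    ("s2", "s3"): 0.90,  # straddles the boundary
    ("s3", "s4"): 0.62,  # within "green"
}

between, within = boundary_effect(categories, pair_accuracy)
# Step 3: a categorical pattern shows up as between > within.
print(between > within)
```

Better discrimination across the boundary than within a category is only the pattern to be explained. Whether it reflects perception rather than, say, labelling is what the fourth step is meant to address.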

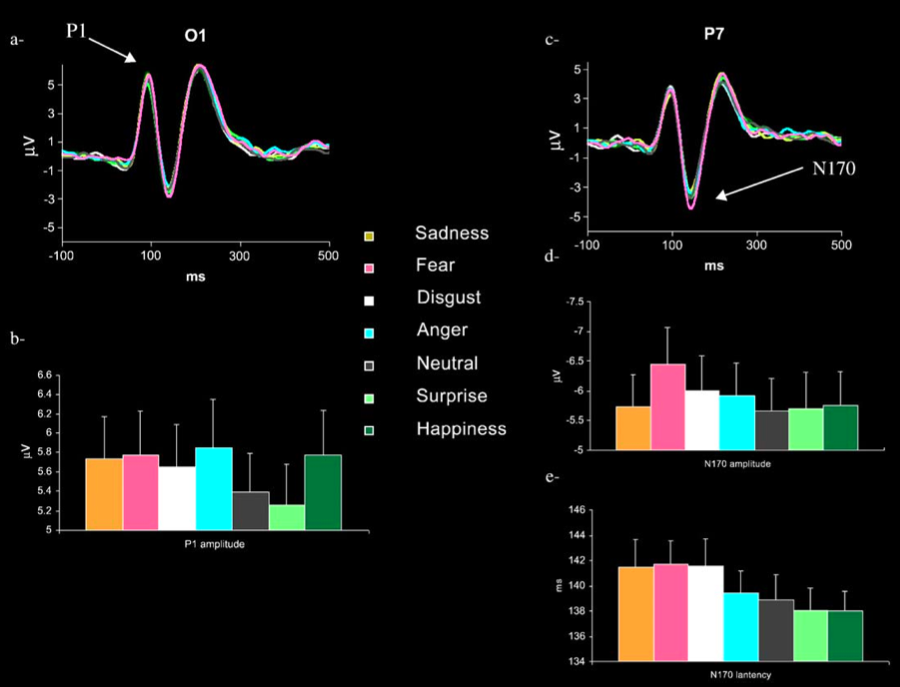

Batty & Taylor (2003, figure 2)

Perceptual?

At least for fear & happiness

- ERP (Campanella et al 2002)

- visual search : behavioural (Williams et al 2005)

‘We sometimes see aspects of each others’ mental lives, and thereby come to have non-inferential knowledge of them.’

McNeill (2012, p. 573)

challenge

Evidence? Categorical Perception!

1. The objects of categorical perception, ‘expressions of emotion’, are facial expressions.

so ...

2. The things we perceive in virtue of categorical perception are not emotions.

The Objects of Categorical Perception

What are the perceptual processes supposed to categorise?

Aviezer et al (2012, figure 2A3)

Aviezer et al's puzzle

Given that facial configurations are not diagnostic of emotion, why are they categorised by perceptual processes?

... maybe they aren’t.

speech perception

| articulation of phoneme | expression of emotion |
| --- | --- |
| communicative function | communicative function |
| isolated acoustic signals not diagnostic | isolated facial expressions not diagnostic |
| complex coordinated, goal-directed movements | complex coordinated, goal-directed movements |

What are the perceptual processes supposed to categorise?

Actions whose goals are to express certain emotions.

- The perceptual processes categorise events (not e.g. facial configurations).

- These events are not mere physiological reactions.

- These events are perceptually categorised by the outcomes to which they are directed.

modest hypothesis about perceptual experience of emotion

Information about others’ emotions can facilitate categorical perception of their expressions of emotion,

which gives rise to phenomenal expectations concerning their bodily configurations, articulations and movements.

‘We sometimes see aspects of each others’ mental lives, and thereby come to have non-inferential knowledge of them.’

McNeill (2012, p. 573)

challenge 2

Evidence? Categorical Perception!

Which model of the emotions?

conclusion

How do you know about it? ・ it = this pen ・ it = this joy

perceive indicator, infer its presence

- vs -

perceive it

[ but perceiving is inferring ✓ ]

‘We sometimes see aspects of each others’ mental lives, and thereby come to have non-inferential knowledge of them.’

McNeill (2012, p. 573)

challenge 1: evidence?

challenge 2: Which model of the emotions?

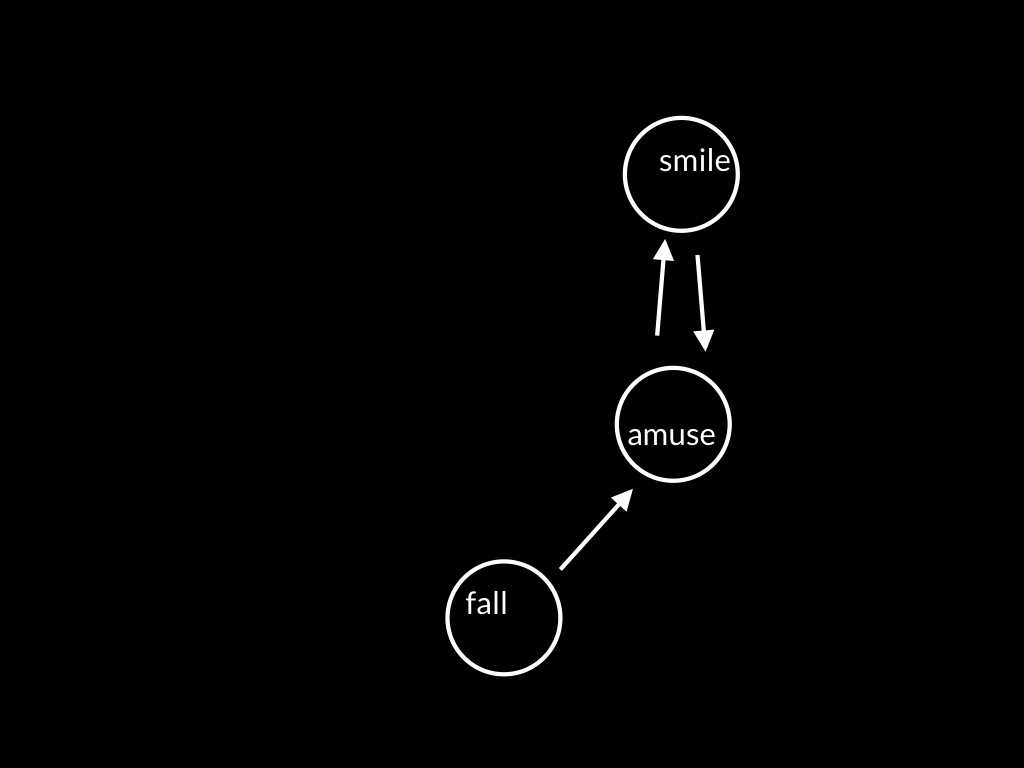

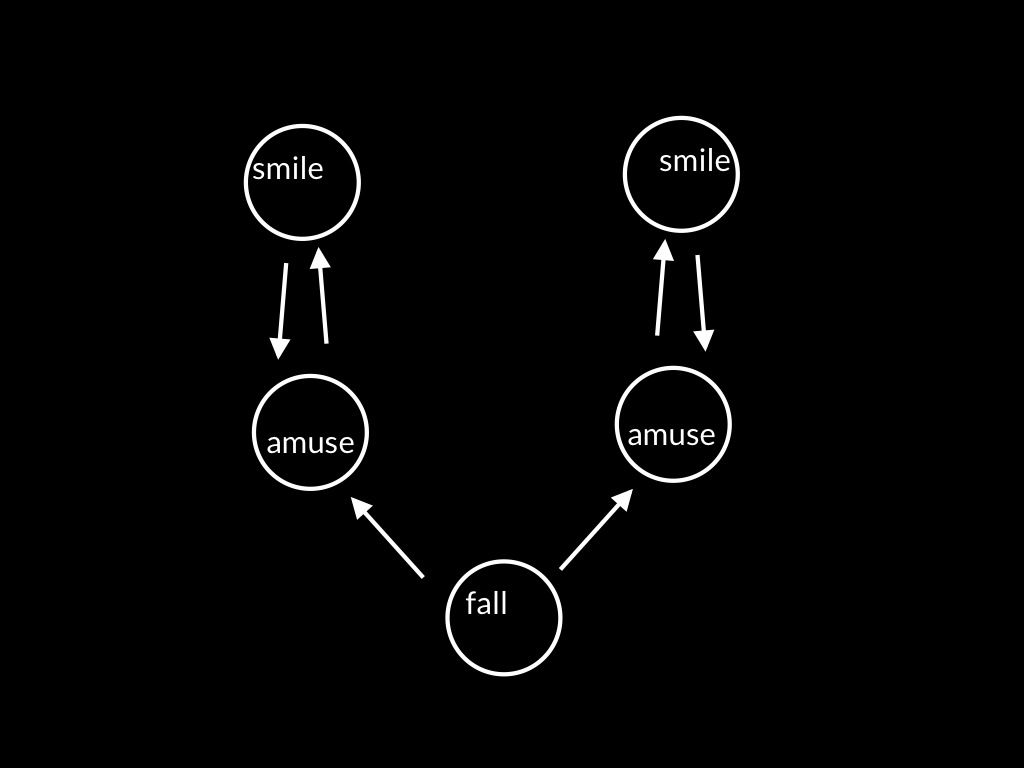

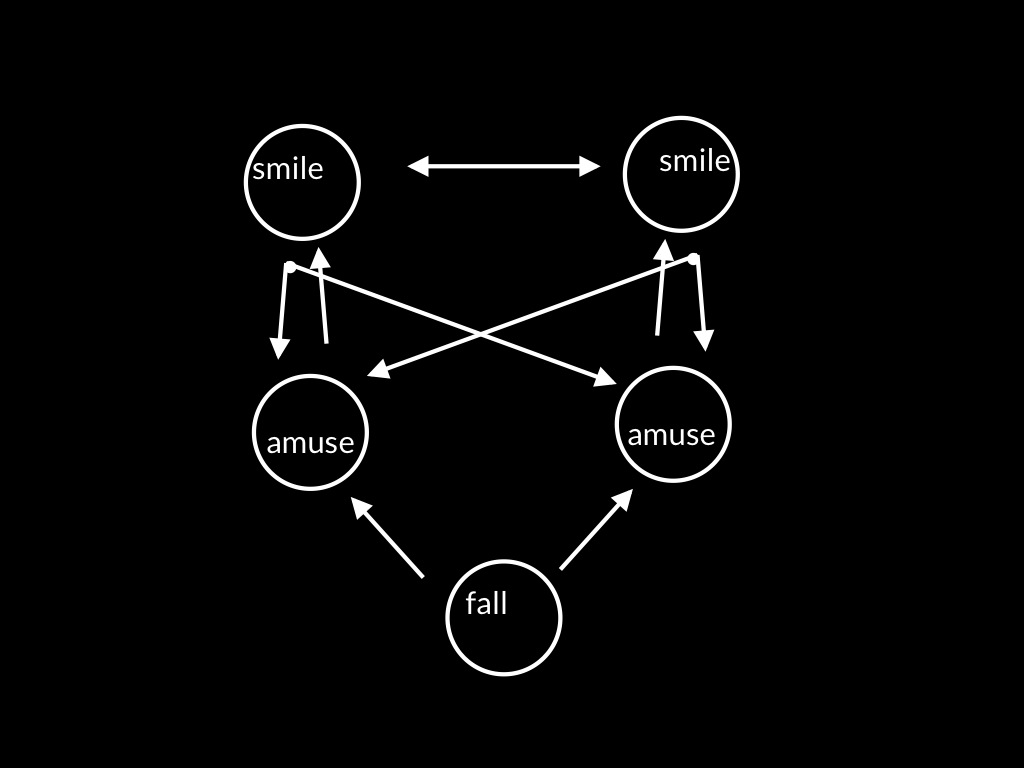

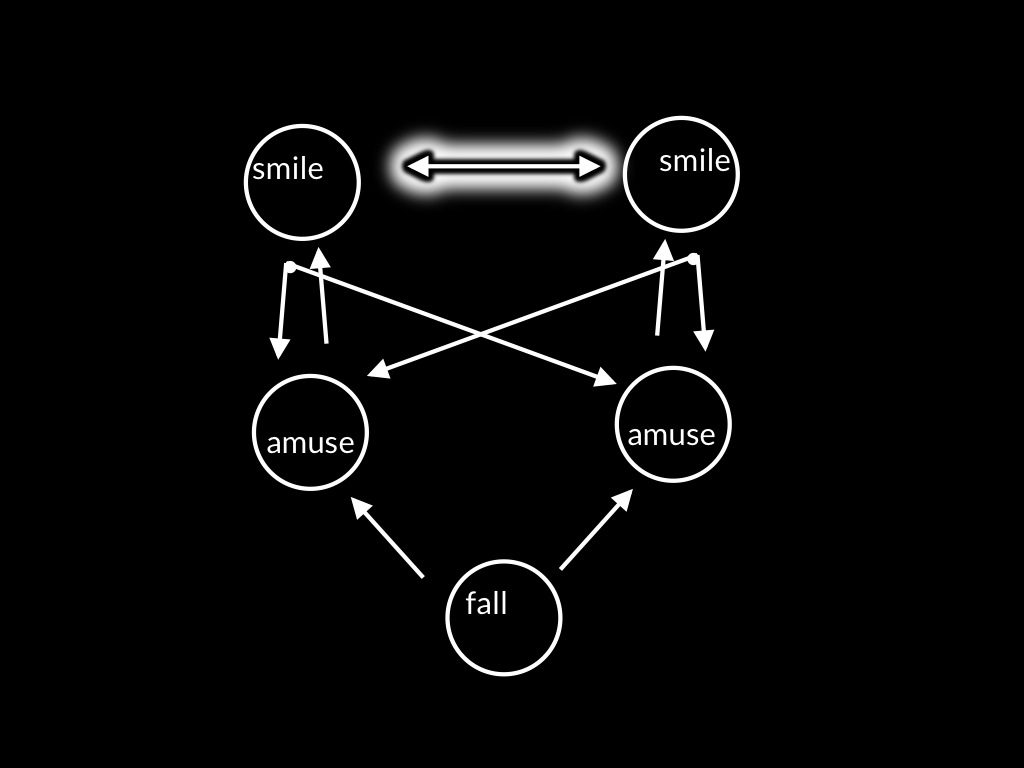

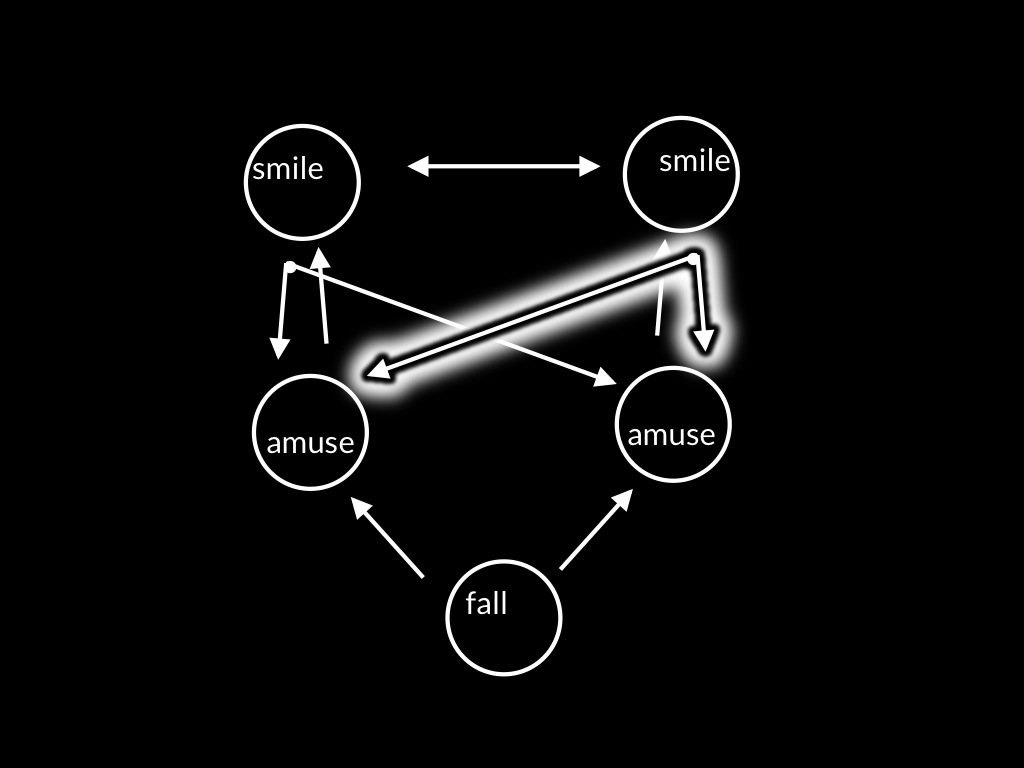

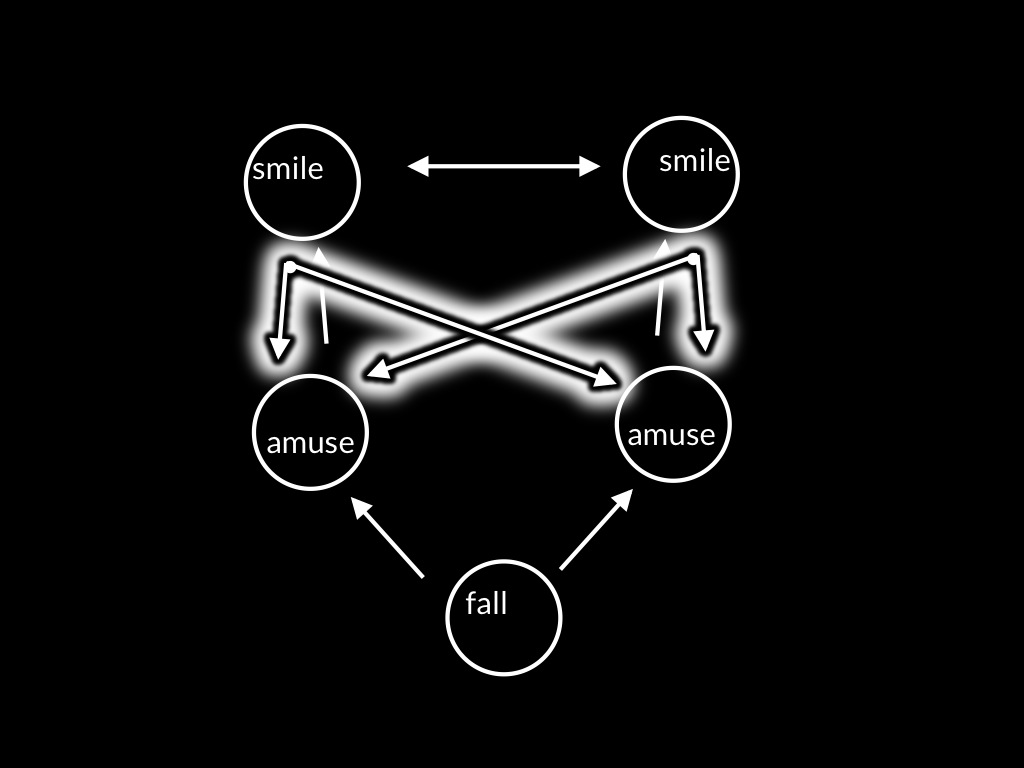

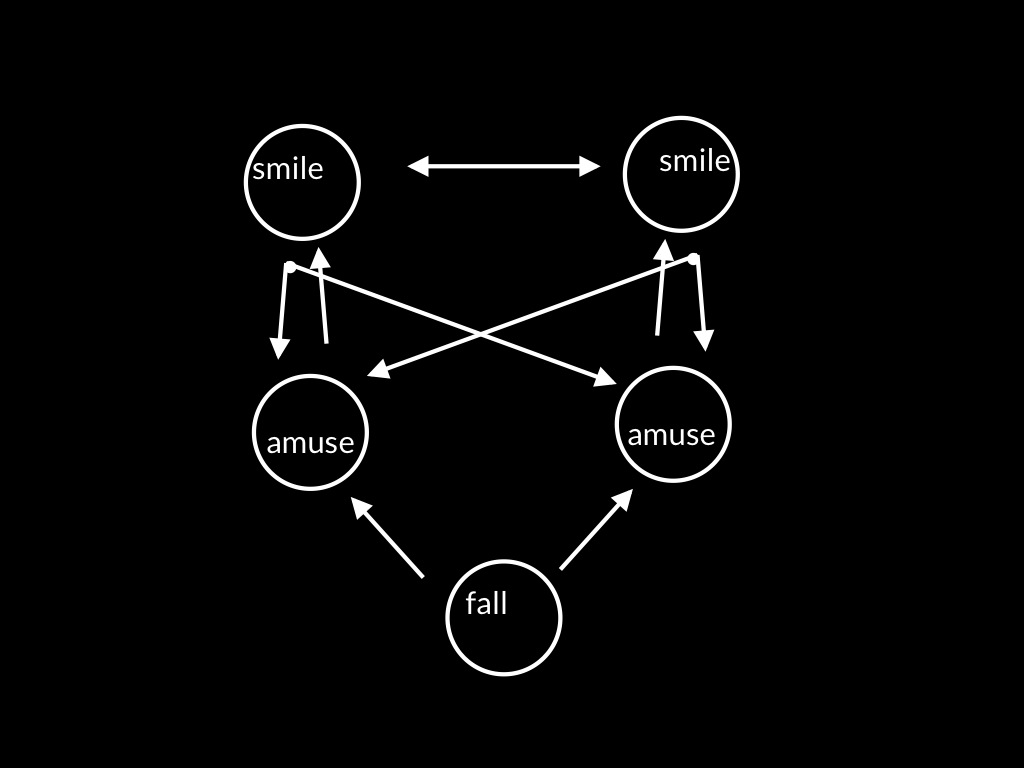

Sharing Smiles

sharing a smile

summary so far

- emotions unfold over time

- control is a way of knowing

- smiling is sometimes a goal-directed action, the goal of which is to smile a smile

collective intentionality opens a route to knowing others’ minds